Fin AI review (2026): Everything support leaders need to know

If AI agents are part of your customer support strategy in 2026, Fin AI is probably on your shortlist. It’s one of the most visible AI agents in the market, with strong reviews and broad adoption — especially among teams already using Intercom. But those signals don’t tell you how an AI agent will perform once it’s embedded in a real support operation.

This guide is written for support leaders evaluating Fin in practice. We’ll look at where Fin delivers meaningful automation, where teams encounter limitations around cost, accuracy, and escalation, and why operational context — not just model quality — ultimately determines whether an AI agent earns a long-term place in your stack.

What is Fin AI?

Fin AI is Intercom’s automated support agent designed to answer customer questions using your existing help content. It draws from your knowledge base, product documentation, and prior support interactions to generate conversational responses inside Intercom’s messenger and help center. The core promise is straightforward: automate a meaningful share of inbound support without rebuilding your content or designing complex workflows.

Unlike traditional chatbots built on scripts and decision trees, Fin is a generative AI system embedded directly within Intercom’s support platform. For teams already operating inside Intercom, it often feels like a natural extension of their existing tooling. For teams outside that ecosystem, Fin functions more like an add-on layer — one whose effectiveness depends heavily on the quality of documentation, the structure of support workflows, and the surrounding tech stack.

In practice, Fin is best understood as a high-visibility automation layer optimized for resolving straightforward support questions. The real question for support leaders is how well that approach holds up once simple deflection gives way to complexity, edge cases, and operational pressure.

Where Fin AI performs best

Fin has earned its reputation for a reason. For many teams — especially those already running their support operation inside Intercom — it delivers fast, visible automation. In the right environment, value shows up quickly. Here’s where Fin performs most reliably.

It excels at high-volume, repetitive questions

Fin is strongest when customer questions map cleanly to existing help content. Straightforward “how do I…?” requests, policy clarifications, and FAQ-style inquiries are where it consistently performs well. For teams with predictable, repeatable demand, this kind of automation can create immediate breathing room for agents and managers.

It’s deeply embedded in the Intercom ecosystem

Because Fin sits natively inside Intercom, it can draw on help center content, prior conversations, and product documentation without additional integrations. For Intercom-first teams, that built-in context is a major driver of fast time-to-value and smoother rollout compared to standalone AI tools.

It supports multiple channels out of the box

Fin can operate across chat, email, voice, and even customer Slack communities. For teams aiming to deliver a consistent automated experience across channels without deploying separate tools, this breadth is a meaningful advantage.

It performs best when documentation is mature and well-maintained

When knowledge bases are clear, structured, and up to date, Fin typically delivers accurate, conversational responses with relatively little initial tuning. Teams with strong content hygiene often see solid early results.

It reduces operational load in low-complexity environments

In support organizations with simple workflows, limited edge cases, and clean escalation paths, Fin can meaningfully reduce the number of tickets reaching human agents. In those conditions, automation tends to be both effective and easier to manage.

Fin AI pricing: Understanding the cost model in practice

At first glance, Fin’s pricing model appears straightforward. In reality, its cost dynamics depend heavily on how AI resolutions are defined, how demand fluctuates, and how often conversations ultimately require human involvement.

A hybrid pricing model with variable outcomes

Fin combines two cost components:

- Intercom platform fees (seat licenses)

- Per-AI-resolution fees (typically around $0.99 per resolved conversation)

Because charges are tied to AI resolutions rather than to total ticket volume, spend rises with every conversation Fin counts as resolved, including some where a human agent later steps in. This makes overall spend more sensitive to changes in demand and routing behavior than flat-rate or usage-capped models.
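The arithmetic behind this is simple enough to sketch. The Python example below models a monthly bill as fixed seat fees plus a fee for each conversation counted as an AI resolution. The seat count, seat price, volumes, and resolution rate are hypothetical placeholders, not Intercom's actual rates; only the roughly $0.99 per-resolution figure comes from the pricing described above.

```python
from dataclasses import dataclass


@dataclass
class PricingInputs:
    """Hypothetical inputs for a seat-plus-resolution cost model."""
    seats: int                 # number of human agent seats (assumed)
    seat_price: float          # monthly price per seat (placeholder, not a real rate)
    per_resolution_fee: float  # roughly $0.99 per AI resolution


def monthly_cost(p: PricingInputs, inbound_conversations: int,
                 counted_resolution_rate: float) -> float:
    """Fixed seat fees plus a variable fee per conversation counted as an AI resolution."""
    if not 0.0 <= counted_resolution_rate <= 1.0:
        raise ValueError("counted_resolution_rate must be between 0 and 1")
    seat_fees = p.seats * p.seat_price
    counted_resolutions = round(inbound_conversations * counted_resolution_rate)
    return seat_fees + counted_resolutions * p.per_resolution_fee


if __name__ == "__main__":
    # Hypothetical team: same seats and resolution rate, only inbound volume changes.
    inputs = PricingInputs(seats=20, seat_price=100.0, per_resolution_fee=0.99)
    for volume in (10_000, 20_000):
        print(volume, round(monthly_cost(inputs, volume, 0.5), 2))
```

With these placeholder numbers, doubling inbound volume from 10,000 to 20,000 conversations raises the estimate from $6,950 to $11,900: the seat component stays at $2,000 while the resolution component doubles.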

“Resolution” doesn’t always map cleanly to operational impact

Some teams report cases where Fin marks a conversation as resolved even when:

- A human agent intervenes later

- A customer reopens the issue

- The conversation closes without fully addressing the underlying problem

When this happens, resolution-based pricing can diverge from the actual workload removed from agents, making it harder to tie AI spend directly to operational savings.
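One way to quantify that divergence is to divide AI fees by the conversations that genuinely stayed off agents' queues. This is a minimal sketch that assumes your team can count billed resolutions that were later reopened or handed to a human; the function and its inputs are hypothetical, and the $0.99 default mirrors the per-resolution figure above.

```python
def effective_cost_per_deflection(counted_resolutions: int,
                                  later_needed_human: int,
                                  per_resolution_fee: float = 0.99) -> float:
    """AI fees divided by conversations that truly never came back to an agent.

    counted_resolutions: conversations billed as AI resolutions.
    later_needed_human: billed conversations that were reopened, escalated,
        or otherwise returned to a human (assumed to be tracked by your team).
    """
    if later_needed_human < 0 or later_needed_human > counted_resolutions:
        raise ValueError("later_needed_human must be between 0 and counted_resolutions")
    true_deflections = counted_resolutions - later_needed_human
    if true_deflections == 0:
        # Every billed resolution came back to a human: no workload was removed.
        return float("inf")
    return counted_resolutions * per_resolution_fee / true_deflections


if __name__ == "__main__":
    # Hypothetical month: 5,000 billed resolutions with varying "leakage" back to humans.
    for leaked in (0, 1_000, 2_500):
        print(leaked, round(effective_cost_per_deflection(5_000, leaked), 4))
```

If 1,000 of 5,000 billed resolutions come back to a human, the effective cost per conversation actually removed from the queue rises from $0.99 to about $1.24. If half come back, it doubles to $1.98.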

Demand spikes introduce cost volatility

Teams with seasonal traffic or incident-driven surges often see significant swings in AI-related costs. During outages or product issues — when customers are more likely to need nuanced, human support — Fin may still engage early in the conversation, increasing resolution counts without proportionally reducing agent workload.

For leaders responsible for forecasting and budget control, this variability can complicate planning.

ROI depends on the shape of your demand

Fin’s pricing model tends to work best when:

- A large share of inbound volume is FAQ-driven

- Documentation is stable and well-maintained

- AI resolves issues end-to-end without frequent escalation

It becomes less predictable when demand skews toward complex, multi-step issues or when escalation rates are high. In those environments, teams may pay for AI activity that doesn’t materially reduce operational load.

The takeaway: Value is real, but predictability varies

Fin can deliver meaningful efficiency gains in the right support environment. For teams operating under tight budget controls or highly variable demand, the pricing model introduces an additional layer of forecasting complexity that’s important to account for during evaluation.

How Fin AI performs in real-world support environments

Fin’s promise is simple: resolve a meaningful share of inbound volume so human agents can focus on work that requires judgment and empathy. In practice, performance varies based on product complexity, documentation quality, and how support workflows are structured. Across reviews and conversations with support leaders, a few consistent patterns emerge.

Implementation often extends beyond initial setup

Intercom positions Fin as a fast, low-lift deployment — and that can be true for teams with clean, mature documentation. In many environments, however, rollout involves more ongoing work than expected, including:

- Updating or restructuring help content so the AI can interpret it correctly

- Defining rules, exceptions, and escalation paths

- Iterative tuning of prompts, workflows, and policies

This isn’t unusual for generative AI, but it does mean strong performance is typically the result of sustained operational effort, not a one-time configuration.

Performance is strongest on linear issues, weaker on nuance

Fin performs best when questions map cleanly to a single, well-documented answer. Reliability drops when customer scenarios involve:

- Multi-step troubleshooting

- Decision-based workflows

- Context from prior interactions

- Product-specific edge cases

These limitations aren’t unique to Fin — they’re common across AI agents — but they matter more in environments where accuracy and consistency are critical.

Escalation behavior shapes the customer and agent experience

Fin is designed to hand off to a human when needed, but support leaders report cases where escalation doesn’t happen as expected. Common issues include delayed handoffs, conversational loops, or conversations closing prematurely.

When escalation breaks down, the impact shows up downstream: frustrated customers, missing context for agents, and additional cleanup work that offsets some of the efficiency gains from automation.

Ongoing oversight becomes part of daily operations

As Fin absorbs more volume, teams typically spend more time on:

- Reviewing AI transcripts

- Identifying documentation gaps

- Correcting misunderstandings

- Monitoring regressions after product or policy changes

In high-volume environments, this oversight becomes a recurring operational responsibility rather than a background task.

Tech stack alignment influences long-term results

Fin tends to perform best when Intercom is the system of record for support. Teams running multi-tool environments or using Fin as a standalone layer can still see value, but they often trade native context for additional maintenance and coordination overhead.

Taken together, these patterns highlight a common theme: AI performance is less about raw capability and more about how well the system around it is designed to support automation.

Why AI success starts with operations, not automation

Even the most capable AI agents don’t operate in isolation. They sit inside a system made up of documentation, workflows, escalation paths, staffing models, and real-time demand. When that system is consistent and predictable, AI tools tend to perform well. When it’s complex, dynamic, or fragmented across multiple tools, the limits of an AI-only approach become more visible.

AI can automate a meaningful share of inbound volume, but it doesn’t manage the operational work around those conversations. Maintaining accurate documentation, forecasting blended demand, handling nuanced escalations, and giving agents the right context all remain human responsibilities. As AI absorbs simpler tickets, what’s left for agents is often more complex and more time-sensitive — raising the bar for coordination, visibility, and operational discipline.

This is why the same AI agent can deliver strong results in one environment and struggle in another. Performance isn’t just a function of model quality or training data. It’s shaped by how well automation is integrated into the realities of your support operation — and whether your team has the structure and insight needed to manage a hybrid AI + human workforce.

How Assembled approaches AI differently

Most AI agents are built as point solutions for deflection. They answer questions, route conversations, and — when conditions are right — reduce the number of tickets that reach a human. But support isn’t just a stream of questions to automate. It’s an operating system made up of people, workflows, policies, real-time decisions, and constantly shifting demand.

AI can’t succeed in isolation from that system. Assembled’s approach starts from this reality.

Instead of treating AI as a standalone layer, Assembled integrates autonomous AI agents and agent-assist tools directly into the operational foundation of the support team. The platform is designed for hybrid operations — where AI, humans, and workforce management work together rather than in parallel silos.

AI that’s built into the operation, not bolted on top

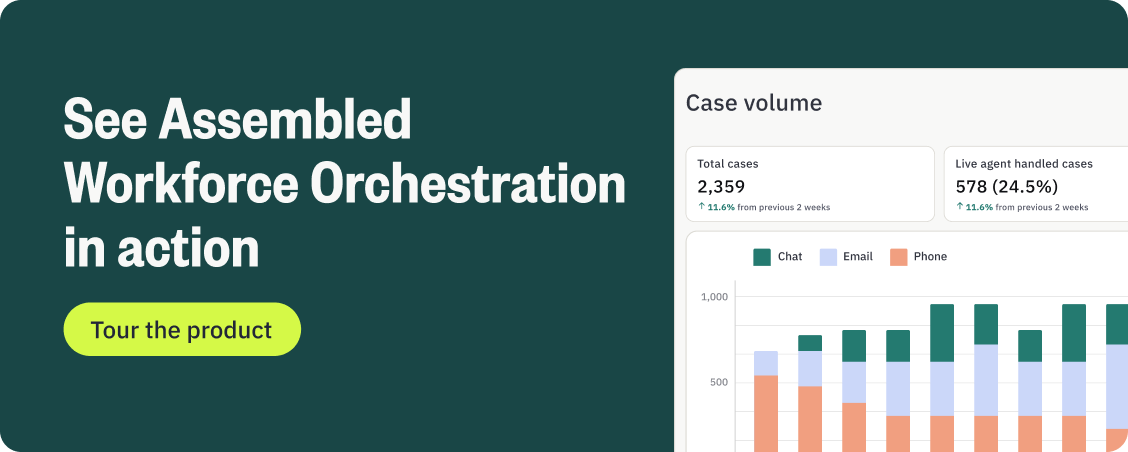

Assembled combines automation with the systems that determine how support actually runs day to day:

- Autonomous AI Agents that resolve cases across chat, voice, and email — or hand off cleanly when human judgment is needed

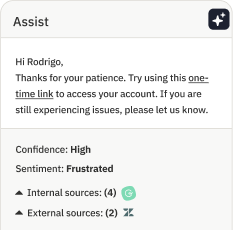

- An AI Copilot that helps agents move faster and stay accurate on complex cases

- Workforce management that plans capacity for both human and AI-handled work

- Forecasting and scheduling built around blended demand across channels

- Real-time operational visibility to manage spikes, escalations, and SLA exposure

The result is a coordinated system where automation isn’t just deployed — it’s governed, measured, and aligned with real operational constraints.

Operational intelligence that shows where AI actually helps

Deploying AI is only half the challenge. Understanding where it delivers value — and where it doesn’t — is what allows teams to scale responsibly.

Assembled gives support leaders visibility into questions like:

- When should AI handle a conversation versus handing it off to a human?

- Where are customers getting stuck, and why?

- How does automation reshape staffing needs across teams and channels?

- Which queues benefit most from AI, and which still require human coverage?

This insight allows teams to model staffing plans that account for both AI capacity and human resources, rather than treating automation as an isolated efficiency lever.
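To see why AI capacity and human staffing need to be planned together, here is a deliberately simple workload-based estimate in Python. It is an illustrative assumption, not Assembled's forecasting method: it ignores arrival patterns, service levels, and the queueing effects that production workforce management models (for example, Erlang C) account for. All inputs, including a longer handle time for the work that remains after AI, are hypothetical.

```python
import math


def agents_needed(forecast_volume: int, ai_resolution_rate: float,
                  human_aht_minutes: float, productive_hours_per_agent: float) -> int:
    """Simple workload-based estimate of human agents needed for a period.

    Conversations the AI does not resolve are assumed to reach humans.
    human_aht_minutes is the average handle time of that remaining work, which
    may exceed the pre-AI average because the simplest tickets are gone.
    Ignores arrival patterns, service levels, and queueing effects.
    """
    if not 0.0 <= ai_resolution_rate <= 1.0:
        raise ValueError("ai_resolution_rate must be between 0 and 1")
    if productive_hours_per_agent <= 0:
        raise ValueError("productive_hours_per_agent must be positive")
    human_volume = forecast_volume * (1.0 - ai_resolution_rate)
    workload_hours = human_volume * human_aht_minutes / 60.0
    return math.ceil(workload_hours / productive_hours_per_agent)


if __name__ == "__main__":
    # Hypothetical month before AI: 12,000 conversations at 10 minutes each,
    # 130 productive hours per agent.
    print(agents_needed(12_000, 0.0, 10.0, 130.0))
    # Hypothetical month with AI resolving 40%, but remaining work averages 14 minutes.
    print(agents_needed(12_000, 0.4, 14.0, 130.0))
```

With these numbers, a 40% AI resolution rate lowers the estimate from 16 agents to 13 rather than to 10, because the conversations left for humans take longer to handle.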

Automation designed for reliability and agent trust

Assembled’s AI is built to handle complete resolutions, not just generate answers. Structured workflows define how issues are resolved — gathering information, executing actions, applying business logic, and escalating when needed — while guardrails prevent low-confidence or policy-violating responses from reaching customers.

When AI hands off or assists, the agent experience is intentionally designed to reduce friction. Copilot features like source attribution, style controls, wrap-up automation, and feedback loops help agents trust the system and improve AI output over time.

This focus on reliability and adoption reduces cleanup work and ensures AI becomes a tool agents rely on — not one they work around.

Implementation that reflects operational reality

Rolling out AI in a complex support environment requires more than access to a model. Assembled provides structured onboarding, deep integrations, and ongoing partnership to help teams adopt automation safely and sustainably — whether the goal is modest deflection or AI handling the majority of volume over time.

The difference comes down to design. Assembled brings AI automation and operational control together in one platform, so teams don’t have to trade efficiency for visibility or reliability.

How to decide if Fin AI belongs on your shortlist

Fin AI can be a strong option in environments with predictable demand, clean documentation, and straightforward escalation paths. For teams operating with more complex workflows, blended staffing models, or tighter requirements around visibility and control, it’s worth evaluating a platform designed for orchestration from the start.

If you’re weighing those tradeoffs, book a demo to see how Assembled approaches AI — and compare how each model performs in the context of your support operation.