Your LLM provider will go down, but you don’t have to

LLM providers are famously unreliable. When writing this article, I took a look at OpenAI and Anthropic's status pages, and they reported 99.80% and 99.58% uptime respectively over recent months. This translates to over 3 hours of potential downtime per month — an eternity when you're powering customer-facing features.

At Assembled, we've adopted Stripe's philosophy: we get to choose our own vendors, so we’re responsible for downtime regardless of which vendor caused the failure.

Before implementing automated fallbacks, we experienced multiple customer-impacting outages. These incidents were particularly frustrating because there wasn't much we could do in real-time other than manually switch models — a process that could take precious minutes during an active outage.

These experiences taught us that reactive fixes weren't enough. We needed an engineering approach that builds resilience into the architecture from the start.

Manual switchovers (and why they don’t work)

Our first attempt at handling provider outages involved on-call engineers manually switching providers during outages with an easily accessible configuration. Though clever, this ultimately failed because:

- Multiple providers per use case: We use a variety of different models and are constantly swapping in new models, so blanket switches broke our nuanced routing.

- Response delays: Even with good procedures, manual switchovers often took several minutes and caused stress for the on-call engineer.

- Poor outage classification: It was hard for humans to quickly distinguish between full outages and transient issues, meaning judgment calls would have to be made for elevated error rates that didn’t take a provider fully down.

The manual approach taught us that we needed automation, but it also revealed the complexity that our automated system would need to handle.

Building automated fallbacks

To combat these problems, we designed a very simple automated fallback system that maintains separate ordering preferences for different model categories. This enabled instant failovers when providers become unavailable.

Model categories

First, we organized our models into categories based on their intended use cases.

Provider ordering and fallback logic

Then we established a global provider ordering that determines our fallback sequence when the primary model fails:

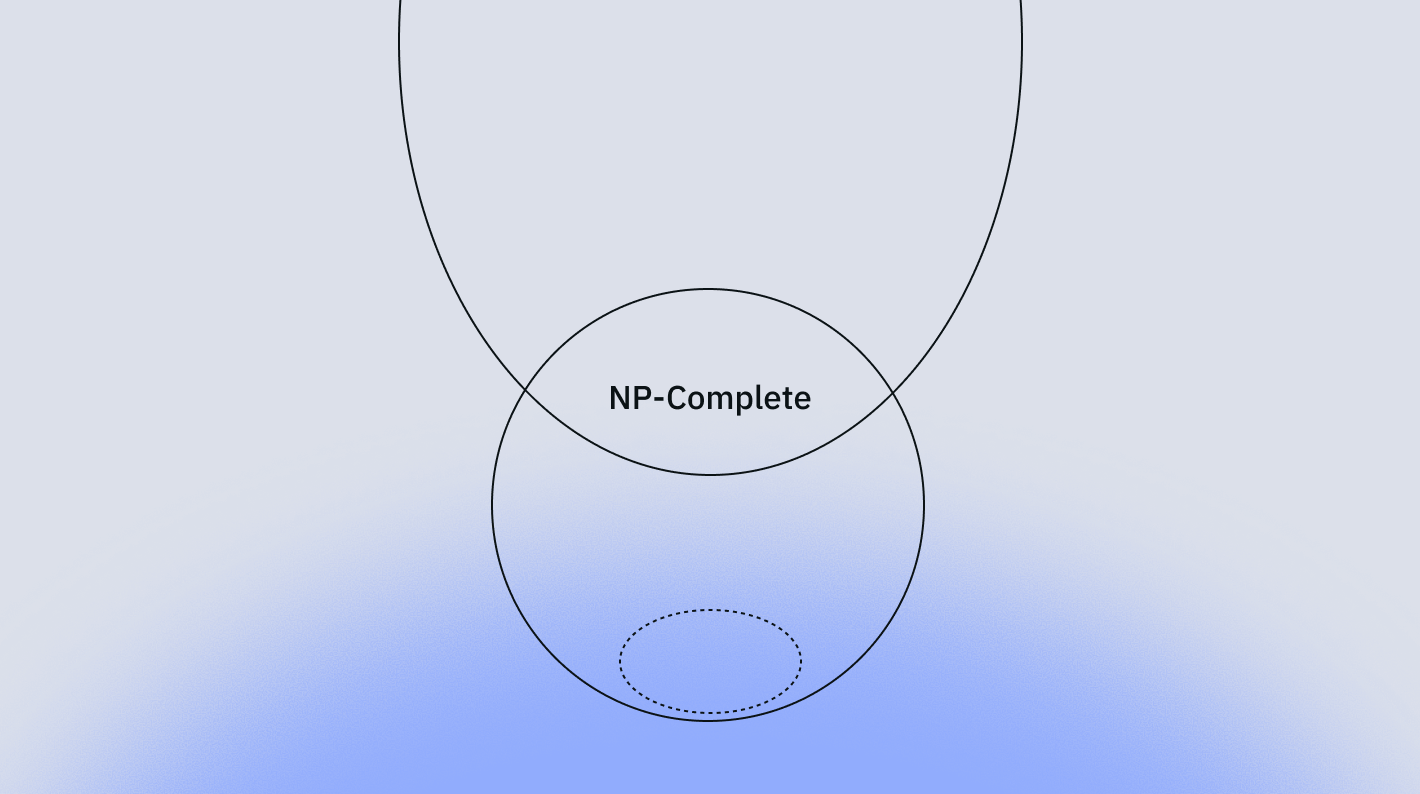

The fallback logic maintains model category consistency across providers. If GPT-4.1-Mini fails, we fall back to Claude 3.5 Haiku (both "Fast" category), then Gemini 2.5 Flash. This ensures users get equivalent capability levels regardless of which provider ultimately handles the request.

We also provided configurable timeouts for each LLM request to cancel a request after a certain amount of time.

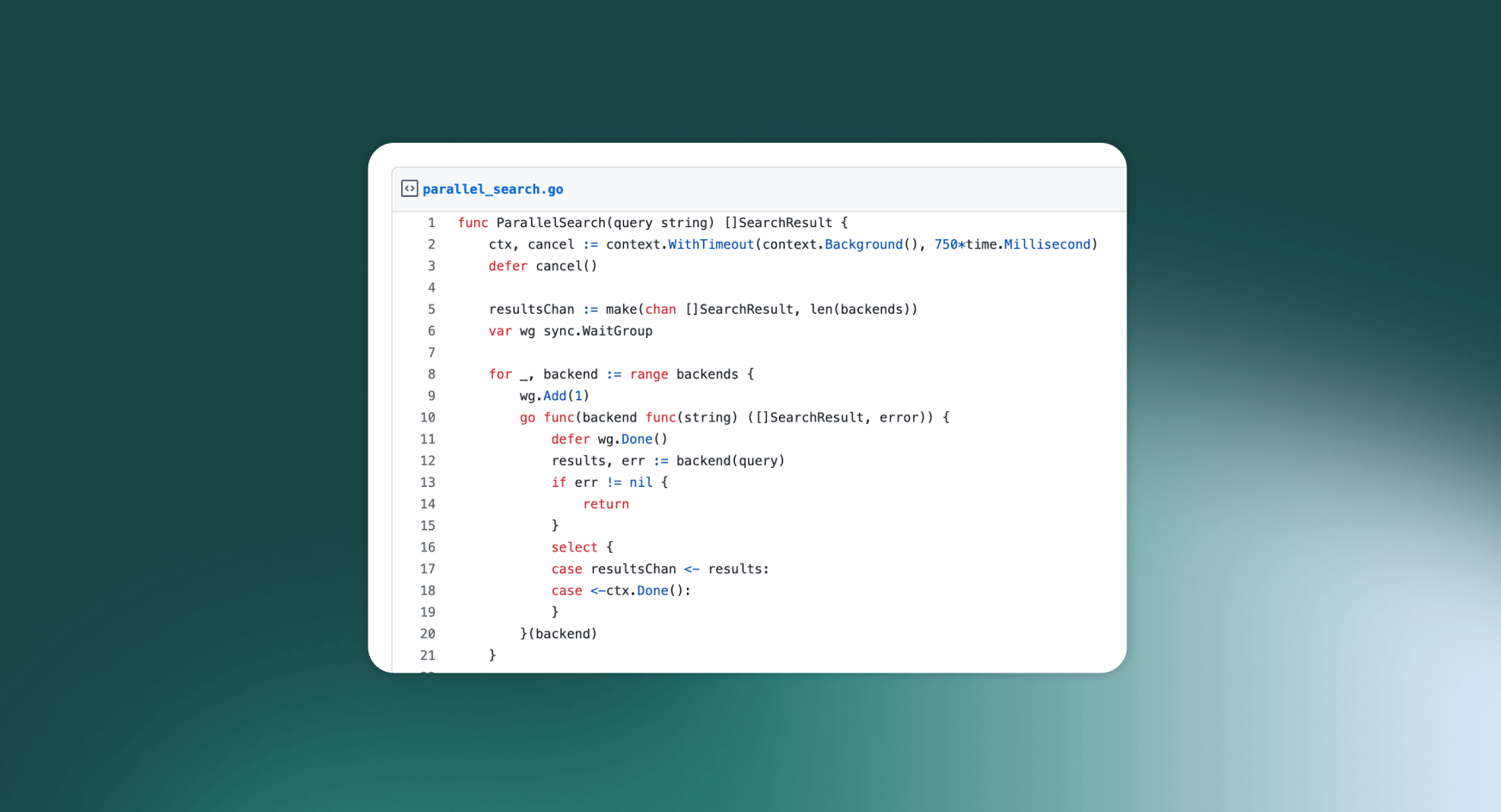

Once we established fallback ordering and categories, the implementation was relatively straightforward: listen for an error or a timeout, then try the next model in that category.

Handling streaming responses

Streaming adds one key complexity: once we start sending tokens to a user, we can't easily retry with a different model since you may be in the middle of a response (and restarting the stream would cause awkward end user results). Luckily, we found that most outages happen before the first token is returned, so we only attempt a fallback if streaming hasn't yet begun:

Benefits of our simple fallback approach

Instant failover: Our system detects failures and switches providers within milliseconds, eliminating the 5+ minute manual switchover delays that previously caused customer-visible outages.

Automatic handling of partial degradations: This benefit surprised us. Many LLM providers experience transient errors where a small but non-trivial percentage of requests fail — maybe 2–5% over a 10-minute window. We'd also see increases in failures grouped together, but it wouldn't be enough for us to justify a manual switchover. These partial degradations are now handled automatically by the same logic that handles full-scale outages.

Hybrid approach for optimization: We still have the ability to perform manual switchovers of our main model provider, but now it's more of a latency or quality optimization rather than an emergency response to keep us operational.

During a recent multi-hour LLM provider outage, customers experienced near-zero impact with request failure rates below 0.001% — all thanks to automated failover. More importantly, we've eliminated the stress and urgency of emergency manual failovers. Our on-call engineers can focus on building new features rather than frantically switching configurations during outages.

The cost of redundancy: More evals

Automated fallbacks significantly improved reliability but introduced new quality challenges. We can no longer evaluate prompts against a single model — we have to ensure consistent quality across the entire fallback chain.

Our evaluation burden increased quite significantly as every prompt change requires evaluation against at least the first two providers in our fallback sequence. This expanded evaluation added 20–30% to our prompt development time.

However, the change has pushed us to invest more in our LLM-as-a-judge tooling so that we can more easily evaluate results across providers in an automated way — something we'll be diving deeper into in an upcoming blog post. Since secondary providers are not hit that often and used as fallbacks, we don’t need a full human evaluation on those fallback providers prompts.

Results and lessons learned

Since implementing automated fallbacks, we've seen dramatic improvements in system reliability:

- 99.97% effective uptime on our AI model responses despite multiple provider outages

- Average failover time reduced from 5+ minutes to hundreds of milliseconds

- Zero manual interventions required during provider outages

The hybrid approach has proved particularly valuable. We maintain the ability to manually adjust provider orderings for performance optimizations, but these changes now enhance our service rather than serve as emergency responses to outages.

Perhaps most importantly, we've learned that treating vendor reliability as a solvable engineering problem (rather than an external dependency we can't control) leads to more robust and customer-friendly solutions. Our customers shouldn't have to care which LLM provider is having issues on any given day. Building reliable systems on top of unreliable dependencies is both good engineering and essential for maintaining customer trust.

We're always working on making our systems more robust and our customer experiences more reliable. We’re working on everything from AI voice agents to knowledge processing pipelines to customer-facing automation tools. If you're interested in helping us solve these kinds of challenges, check out our open roles.