What is Decagon AI? Use cases, limitations, and better alternatives for enterprise support

Decagon is an enterprise AI platform that automates customer support using large language models to handle tickets across chat, email, and voice. Its core pitch is straightforward: replace human agents with autonomous AI that can resolve issues end-to-end.

The appeal is clear for fast-moving teams looking for quick automation. But once operations involve multiple channels, geographies, vendors, and SLAs, the question shifts from “Can it resolve tickets?” to “Can it orchestrate an entire support operation?”

This guide breaks down what Decagon offers, where it fits, where it falls short for complex environments, and how it compares to more complete platforms like Assembled.

What is Decagon AI?

Decagon is an enterprise AI platform that helps support teams automate customer service using large language models (LLMs). Its AI agents can autonomously handle tasks like answering product questions, processing refunds, and canceling subscriptions — helping businesses reduce support costs and scale faster.

Founding and funding

Co-founded in 2023 by Jesse Zhang and Ashwin Sreenivas, Decagon has quickly emerged as a leading player in AI-powered customer service. The company has raised $231 million in funding across four rounds — including a $131 million Series C in mid-2025 led by Andreessen Horowitz and Accel, valuing the company at $1.5 billion.

Growth and product traction

Decagon’s early traction has been driven by its ability to replace outsourced support labor with AI agents that can handle common tasks like technical troubleshooting, order processing, and subscription cancellations. The company reports over $10 million in signed annual recurring revenue, growing from zero to eight figures in ARR in its first year.

Technology and architecture

Its agents are built on top of foundation models from OpenAI, Anthropic, and Cohere, layered with company-specific data from help centers and historical conversations. In 2025, Decagon partnered with ElevenLabs to launch voice agents for more natural, human-sounding conversations.

The platform excels at fast automation. But its architecture prioritizes speed and autonomy over collaboration — often favoring self-contained resolution instead of context sharing or deeper operational integration.

Decagon’s key features and capabilities

Decagon's core features center on autonomous ticket resolution using LLM-powered natural language understanding. These capabilities help enterprise teams automate routine support tasks, resolve tickets faster, and scale digital service without adding headcount.

Context-aware responses

Context-aware responses mean customers get answers that feel tailored to their specific situation, not generic scripts. Powered by LLMs and proprietary NLP models, Decagon generates human-like replies in real time by drawing from past interactions, customer data, and conversation history. That enables more natural interactions and reduces the need for repeat questions or rigid flows.

Continuous learning from interactions

Decagon’s AI agents are designed to improve over time. As the system engages with more conversations, it uses those interactions to refine its intent detection, improve prompt grounding, and learn how to handle nuanced or previously unseen queries. This feedback loop enables the AI to get better at resolving issues autonomously without needing constant manual updates.

Scalable omnichannel support

Omnichannel support means Decagon can handle customer conversations across web chat, email, and voice — all from a single platform. The system manages these interactions simultaneously and transfers conversations between channels with continuity, maintaining context across touchpoints.

Actionable insights and analytics

Analytics and insights come through tools like Watchtower, which track AI agent performance including resolution rates, fallback rates, and retraining needs. But these insights remain limited to the AI layer — they don't show how human agents and AI work together or how performance trends across your full support team.
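To make these metrics concrete, here is a minimal Python sketch of how resolution and fallback rates could be computed from conversation outcomes. The `Conversation` record, its `handled_by` and `outcome` labels, and the `outcome_rates` helper are hypothetical names chosen for illustration; they are not Watchtower's data model or API. Running the same calculation for both AI and human conversations is one way to get the side-by-side view that an AI-only dashboard leaves out.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Conversation:
    handled_by: str  # hypothetical labels: "ai" or "human"
    outcome: str     # hypothetical labels: "resolved" or "escalated"


def outcome_rates(conversations, handled_by):
    """Return the share of each outcome among conversations for one handler type."""
    subset = [c for c in conversations if c.handled_by == handled_by]
    if not subset:
        return {}
    counts = Counter(c.outcome for c in subset)
    return {outcome: n / len(subset) for outcome, n in counts.items()}


# Illustrative data only; these are not real customer figures.
sample = [
    Conversation("ai", "resolved"),
    Conversation("ai", "resolved"),
    Conversation("ai", "resolved"),
    Conversation("ai", "escalated"),
    Conversation("human", "resolved"),
    Conversation("human", "resolved"),
]

for handler in ("ai", "human"):
    print(handler, outcome_rates(sample, handler))
```

In this sketch the "escalated" share of AI conversations plays the role of the fallback rate.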

Who are Decagon’s customers?

Decagon's customers are fast-scaling, digital-first companies — typically in tech and consumer sectors — that need to automate high support volumes quickly.

Notable customers

Its customer base includes prominent tech and consumer brands such as:

Notion

Duolingo

Substack

Bilt

Rippling

ClassPass

These organizations prioritize speed, scale, and operational efficiency — making them well suited to Decagon’s automation-first approach.

Common customer traits

These businesses typically share a few key characteristics:

High interaction volume across chat, email, or in-app channels

Repeatable workflows that lend themselves to automation

Cost pressure to reduce headcount and increase agent productivity

In these environments, Decagon shines by deflecting routine inquiries. That is a significant opportunity, since research shows 50% to 60% of customer interactions remain transactional. One customer, Bilt, reportedly reduced its support headcount from hundreds to just 65 using Decagon. ClassPass uses Decagon's agents to handle over 2.5 million customer conversations, reducing cost per reservation by 95%.

Where customer needs outgrow Decagon

But as support environments expand across geographies, channels, BPOs, and SLAs, Decagon's standalone architecture shows limitations. Teams needing tight coordination between AI and human agents often struggle with gaps in routing, context transfer, and real-time workforce visibility.

The lack of deeper workforce management integration or flexible escalation logic hinders support quality and slows resolution. That friction becomes costly when customer expectations are high and tolerance for mistakes is low.

Common Decagon use cases

Common Decagon use cases center on automating high-volume, repetitive support tasks: password resets, billing questions, account access, and shipping updates. The platform deflects these tickets from human agents by delivering contextual answers, surfacing help center articles, and managing basic troubleshooting workflows. That makes it valuable in fast-moving environments where rapid response times are critical.

Common use cases

Automating routine inquiries

Decagon can resolve straightforward queries related to billing, account access, shipping updates, and password resets. By handling these questions autonomously, it helps teams reduce backlog and improve time to first response.

Managing peak demand periods

During seasonal spikes or product launches, Decagon absorbs overflow volume across chat and email. But the lack of real-time staffing alignment or smart routing can create bottlenecks when agent escalation is needed.

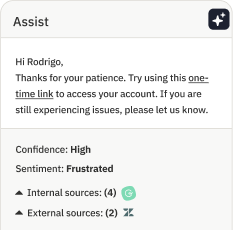

Real-time contextual assistance

The platform uses conversation memory and historical context to personalize replies, enabling a more fluid and human-like experience. This helps reduce customer frustration when navigating multi-step issues.

Sentiment detection and tone management

Decagon can identify when a customer is confused or frustrated and adjust tone accordingly — or trigger fallback logic to escalate to a human. However, that escalation process isn’t always smooth.

Complex workflows like refunds and returns

Even seemingly simple tasks like refunds aren’t always straightforward — especially for businesses managing loyalty programs, product categories, return conditions, and internal approval rules. What looks like a basic request often requires pulling data from multiple systems and applying nuanced logic.

Decagon may handle the front end of these requests, but it often falters when real complexity kicks in. Assembled, by contrast, supports hybrid workflows and smart handoffs that ensure nothing slips through the cracks — no matter how edge-case the request.

Support doesn’t run in a vacuum — it runs on messy systems, shifting workflows, and real human expectations. Decagon’s LLMs may be strong, but without deep operational orchestration, even simple tasks can fall apart in production.

What looks seamless in a demo often struggles in the wild, especially when teams rely on brittle integrations or lack the context to coordinate effectively between AI and human agents.

Decagon pricing

Decagon pricing is not publicly listed, but the company reportedly offers two usage-based models:

Per-conversation pricing: A flat rate for every AI-handled interaction, regardless of the outcome

Per-resolution pricing: A higher rate, charged only when the AI agent fully resolves the customer's issue

The second model is positioned as more performance-driven, but in practice, most teams default to the per-conversation model. It’s easier to explain internally, more predictable to forecast, and avoids the murky work of defining what counts as a “resolution.” (Even Decagon notes that this can be up for debate.)

That flexibility might seem appealing, but it doesn't eliminate uncertainty. Decagon doesn't publish baseline rates, usage thresholds, or real-world cost examples — making it hard for support leaders to estimate spend, especially during volume surges or seasonal spikes.

Because both models are usage-based, costs can compound quickly without clear guardrails.
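The trade-off between the two models comes down to simple arithmetic, sketched below in Python. Because Decagon does not publish its rates, the $1.00 per-conversation and $2.00 per-resolution figures are made-up placeholders, and the function names are hypothetical; substitute quoted rates to run the comparison for a real contract.

```python
def per_conversation_cost(conversations: int, rate: float) -> float:
    """Flat rate for every AI-handled conversation, regardless of outcome."""
    return conversations * rate


def per_resolution_cost(conversations: int, resolution_rate: float, rate: float) -> float:
    """Higher rate, charged only for conversations the AI fully resolves."""
    return conversations * resolution_rate * rate


def break_even_resolution_rate(conversation_rate: float, resolution_price: float) -> float:
    """Resolution rate at which both pricing models cost the same."""
    return conversation_rate / resolution_price


# Hypothetical placeholder rates; Decagon does not publish its pricing.
CONVERSATION_RATE = 1.00
RESOLUTION_PRICE = 2.00

for volume in (10_000, 30_000):  # a normal month vs. a seasonal spike
    flat = per_conversation_cost(volume, CONVERSATION_RATE)
    outcome_based = per_resolution_cost(volume, 0.5, RESOLUTION_PRICE)
    print(f"{volume} conversations: per-conversation ${flat:,.2f}, "
          f"per-resolution ${outcome_based:,.2f}")

print("Break-even resolution rate:",
      break_even_resolution_rate(CONVERSATION_RATE, RESOLUTION_PRICE))
```

With these placeholder rates, the two models cost the same at a 50% resolution rate. Below that, per-resolution pricing is cheaper; above it, per-conversation pricing is cheaper. Under either model, tripling conversation volume triples the bill, which is why usage-based pricing needs guardrails.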

Why that matters for support teams

For teams managing tight budgets, shifting staffing models, or executive pressure to prove ROI, this kind of unpredictability adds risk. Even if automation promises cost savings, those gains are hard to validate when pricing is opaque and spend varies based on conversation volume or loosely defined success metrics.

By contrast, platforms like Assembled offer AI pricing that is transparent, scalable, and grounded in real operational outcomes rather than raw usage. Teams get clear visibility into both AI and human performance, direct integrations with tools like Zendesk and Salesforce, and pricing that scales with actual value delivered. That makes it easier to right-size investments, forecast spend, and prove ROI — without relying on vague seat licenses or flat fees.

When your support operation runs on complexity, you need more than flexible pricing — you need pricing that’s predictable, measurable, and built to scale with your business.

Where Decagon falls short

Decagon's limitations center on operational scale, human-AI coordination, and workforce readiness. While the platform automates customer interactions effectively, gaps appear when support teams need to manage unpredictable demand, blended workforces, and high service expectations. Those gaps create real risks for enterprise operations.

Limited workforce management integration

Limited workforce management integration is Decagon's biggest operational gap. The platform was built to resolve tickets rather than to run entire support operations. It doesn't offer native integrations with workforce management tools or staffing systems, making it difficult for CX leaders to connect AI activity with real-time agent coverage, scheduling accuracy, or intraday performance.

This disconnect creates blind spots, especially in environments where human agents, BPOs, and AI agents are all in play.

That also limits how quickly teams can identify new automation opportunities. Without visibility into agent queues, case timelines, or resolution patterns, it's harder to spot the low-hanging fruit — the kinds of tasks that AI can reliably take on without hurting quality.

In contrast, Assembled gives teams deep insight into queue-level performance and case lifecycle data. That means support leaders can see which tickets agents resolve fastest, which queues are most repetitive, and which workflows are already handled by Tier 1 or BPO teams — all strong signals for automation readiness.

It’s the benefit of having workforce management and AI built into one platform: unified data, a single UX, and shared intelligence that accelerates both efficiency and innovation. Instead of guessing where automation might help, teams using Assembled can target it precisely — and measure its impact in real time.

Challenges with scalability during demand surges

Scalability challenges emerge when Decagon faces peak load. The platform promises fast responses and high resolution rates, but several reviewers report performance degradation during high-volume periods, including slower response times, unanticipated AI errors, and more tickets routed back to human agents than expected.

Gaps in AI-human collaboration

Decagon’s design centers on autonomous resolution, but it can fall short when customer conversations require a smooth handoff to human agents. In some cases, support teams have noted challenges with passing full context during escalations, resulting in customers repeating themselves or agents having to backtrack. Delays can also arise from unclear escalation paths or handoff logic.

This lack of seamless collaboration between AI and humans can frustrate customers and add drag to the support process. It matters most for complex issues: many customers, including 71% of Gen Z, believe a live call is the quickest way to explain them. Modern support operations therefore require tight AI-human alignment, where agents are empowered with full context and can step in without losing the thread.
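One way to picture a context-preserving handoff is as a structured record that travels with the escalation, so the human agent can see what the AI already learned and what is still missing. The Python sketch below is illustrative only: `HandoffContext`, `missing_fields`, `agent_briefing`, and the field names are hypothetical and do not describe Decagon's or Assembled's actual escalation format.

```python
from dataclasses import dataclass, field


@dataclass
class HandoffContext:
    """Hypothetical record passed from an AI agent to a human agent."""
    customer_id: str
    issue_summary: str
    escalation_reason: str
    collected: dict[str, str] = field(default_factory=dict)  # details the customer already gave
    transcript: list[str] = field(default_factory=list)      # full conversation, in order


def missing_fields(ctx: HandoffContext, required: list[str]) -> list[str]:
    """List required details the AI did not capture before escalating."""
    return [name for name in required if not ctx.collected.get(name)]


def agent_briefing(ctx: HandoffContext, required: list[str]) -> str:
    """Build a short briefing so the agent does not re-ask answered questions."""
    lines = [
        f"Customer: {ctx.customer_id}",
        f"Issue: {ctx.issue_summary}",
        f"Escalated because: {ctx.escalation_reason}",
    ]
    lines += [f"Already collected {key}: {value}" for key, value in ctx.collected.items()]
    gaps = missing_fields(ctx, required)
    lines.append("Still needed: " + (", ".join(gaps) if gaps else "nothing"))
    return "\n".join(lines)


# Illustrative values only.
ctx = HandoffContext(
    customer_id="cust_123",
    issue_summary="Refund request for a duplicate charge",
    escalation_reason="Refund amount exceeds the AI approval limit",
    collected={"order_id": "A-1001"},
    transcript=["Customer: I was charged twice.", "AI: Can you share your order ID?"],
)
print(agent_briefing(ctx, required=["order_id", "email"]))
```

The design point is that the agent only asks for what appears under "Still needed", rather than restarting the conversation.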

Alternatives to Decagon

Alternatives to Decagon exist for support teams that need more than standalone automation. While Decagon is a visible name in AI-powered support, it isn't the most complete option for organizations managing complex operations. For teams that need trustworthy automation with operational depth, Assembled AI agents offer a more flexible, integrated path forward.

Assembled’s AI agents are built on top of a workforce management foundation, which means they don’t just resolve tickets — they help run your entire support operation more efficiently. From omnichannel orchestration to real-time performance analytics, Assembled’s approach fills in the gaps where standalone AI platforms like Decagon fall short.

Where Decagon automates, Assembled orchestrates. In complex support environments, orchestration provides more leverage than simple automation.

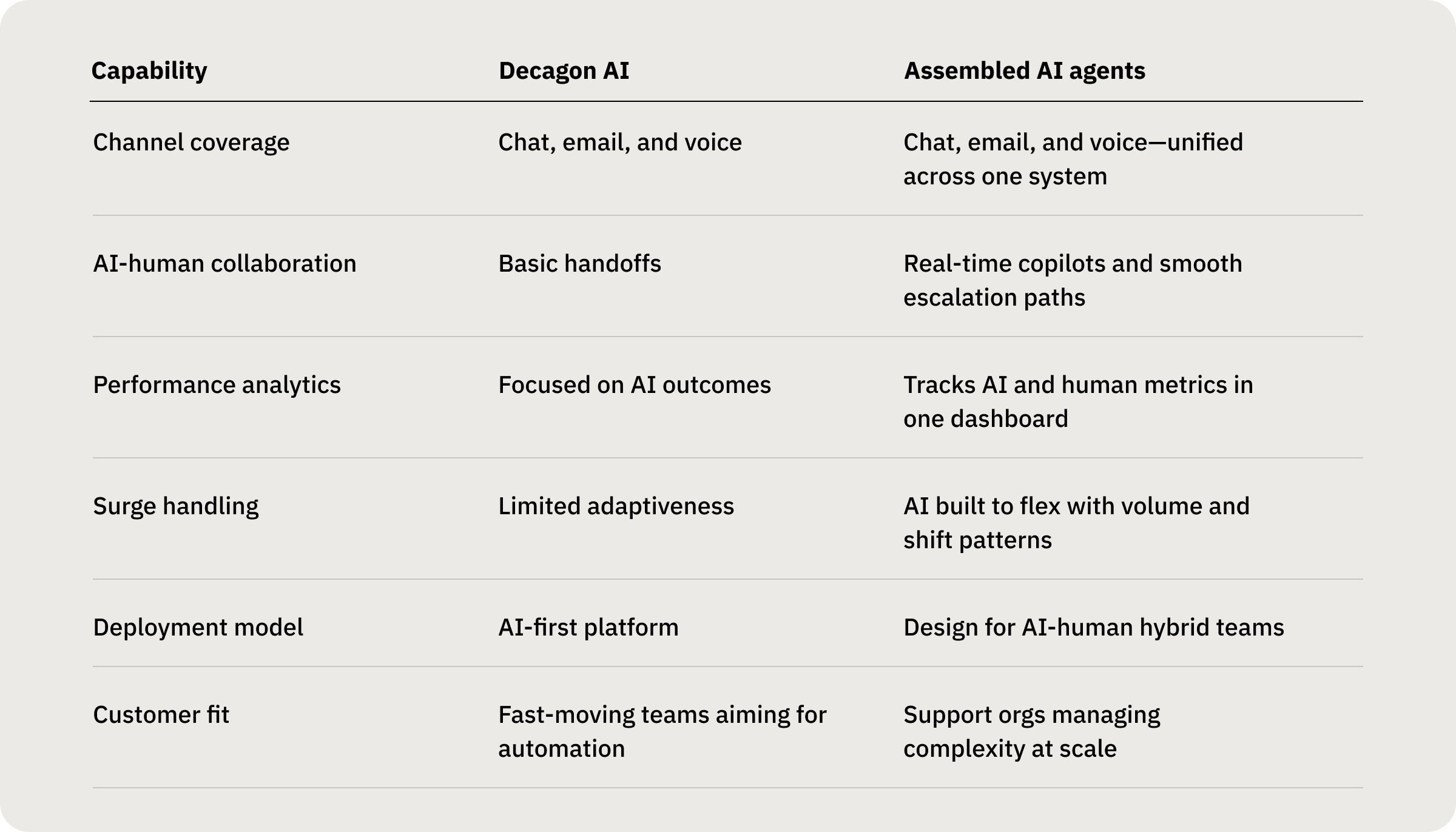

Key differences between Decagon and Assembled AI agents

If you’re evaluating top alternatives to Decagon AI, here’s how Assembled AI agents stack up.

What to look for in a Decagon alternative

When evaluating alternatives, consider these three essential capabilities:

Omnichannel excellence

Omnichannel excellence means AI agents can handle conversations across voice, chat, and email with full context continuity. Modern support isn't limited to a single touchpoint — customers expect consistent service wherever they reach out. Assembled AI agents unify these conversations so nothing gets lost in translation and customers get high-quality responses no matter which channel they choose.

Comprehensive analytics

Comprehensive analytics show both AI and human performance side by side, detailing how AI resolutions impact team SLAs, agent productivity, and overall support quality.

Adaptability during surges

Adaptability during surges means AI that adjusts to volume fluctuations without breaking down. Assembled's AI agents are built to handle unpredictable demand, hand off to human agents intelligently, and scale without sacrificing response quality. Whether you're facing seasonal spikes or a surprise campaign, your support quality stays consistent.

Unlock better results with Assembled AI agents

Decagon has made a name for itself in the AI support space — and for good reason. Its generative models handle routine queries with speed and accuracy. But for support teams that need more than just automation, the gaps quickly show: no deep workforce alignment, limited analytics across agents and AI, and rigid escalation flows that can break under pressure.

Assembled AI agents fill those gaps with a fundamentally different approach. By combining LLM-powered automation with real-time orchestration, Assembled helps teams not just respond faster, but operate smarter. From intelligent routing to human-AI collaboration to built-in performance tracking, it’s everything modern support teams need to stay agile, efficient, and customer-first.

Don’t settle for isolated AI. Book a personalized demo to see how Assembled can power your entire support operation — from first message to final resolution.

Frequently asked questions about Decagon AI

Is Decagon AI legit?

Yes, Decagon is a legitimate enterprise AI platform backed by $231 million in funding from Andreessen Horowitz and Accel, valued at $1.5 billion. The company serves major brands including Notion, Duolingo, and Rippling, with over $10 million in signed annual recurring revenue.

Who is the CEO of Decagon AI?

Decagon's CEO is Jesse Zhang, who co-founded the company in 2023 with Ashwin Sreenivas.

How long does Decagon implementation take?

Implementation timelines vary by complexity, but Decagon positions itself as a fast-deployment solution. However, teams should expect several weeks to months for proper integration with existing systems, agent training data collection, and workflow customization.

Can Decagon integrate with existing support tools?

Decagon integrates with major customer service platforms including Zendesk, Salesforce, and similar helpdesk systems. However, it lacks native workforce management integrations, which can limit visibility into how AI and human agents coordinate in real time.

What happens when Decagon can't resolve an issue?

Decagon uses fallback logic to escalate conversations to human agents when it can't resolve an issue. However, context transfer during these handoffs isn't always seamless — which can force customers to repeat information and agents to backtrack through conversation history.